The data center ecosystem is evolving and growing at every level, with activity concentrated in the cloud, at the edge of the network, and, increasingly, in cloud resources at the edge. The old IT closets aren’t extinct, but they’re rapidly becoming a relic of the early rush to the edge.

When we surveyed data center professionals in 2019 as part of our Data Center 2025 update, more than half of those with edge sites said they expected to double those sites by 2025, and 1 in 5 predicted an increase of 400% or more. Now, as we reach the halfway mark on the road to 2025, a new survey indicates those ambitious forecasts were well-founded.

The latest research, which I encourage everyone to read for themselves, reinforces the 2019 projections and paints a picture of an industry simultaneously growing and changing to support an insatiable demand for computing, especially at the edge.

Survey participants expect the edge component of total compute to increase from 21% to 27% over the next four years and the public cloud share — which increasingly includes cloud resources at the edge — to grow from 19% to 25%. Not surprisingly, this mirrors a continued shift away from centralized, on-premises computing, which is projected to decline from 45% of total compute to 35%.
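To make those shifts easier to compare, the arithmetic can be laid out as both percentage-point and relative changes. This is a minimal Python sketch that uses only the figures quoted above; the dictionary keys and the `share_changes` helper are illustrative names, not part of the survey.

```python
# Shares of total compute quoted in the survey, in percent: (today, in four years).
shares = {
    "edge": (21, 27),
    "public_cloud": (19, 25),
    "on_premises": (45, 35),
}


def share_changes(current, projected):
    """Return (percentage-point change, relative change in percent)."""
    point_change = projected - current
    relative_change = 100 * point_change / current
    return point_change, round(relative_change, 1)


for name, (current, projected) in shares.items():
    points, relative = share_changes(current, projected)
    print(f"{name}: {points:+d} points ({relative:+.1f}% relative)")

# The three quoted categories do not add up to 100%.
total_today = sum(current for current, _ in shares.values())
total_projected = sum(projected for _, projected in shares.values())
print(f"quoted categories cover {total_today}% today and {total_projected}% projected")
```

Edge and public cloud each gain six points while on-premises loses ten. The three quoted categories cover 85% of compute today and 87% in the projection; the remainder is not broken out in this article.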

Dig a little deeper and we see not just a changing compute profile and a growing edge component, but significant changes to the individual edge sites themselves. Simply put, they’re getting bigger, and they’re consuming more power. According to our survey, 42% of all edge sites have at least five racks, 13% house more than 20 racks, and 14% require more than 200 kilowatts (kW).

The need to reduce latency or minimize bandwidth consumption by pushing computing closer to the user drove the initial shift to the edge and is driving these changes, but the race to the edge has resulted in an inconsistent approach to these deployments. These are increasingly sophisticated sites performing high-volume, advanced computing, too often stitched together almost as an afterthought.

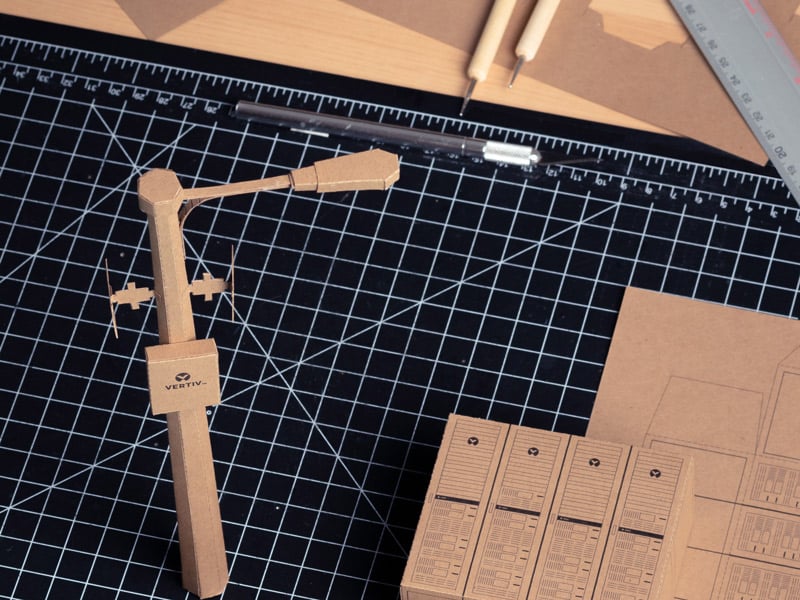

That’s why we developed the Edge Archetypes in 2016, categorizing edge deployments by use case, and why we went a step further last year with Edge Archetypes 2.0. The new report introduced deployment-ready edge infrastructure models that bring a standardized approach to edge deployments. This level of normalization across defined edge categories didn’t exist previously. It gives organizations an important tool to deploy edge sites faster, reduce costs, and operate more efficiently. The four edge infrastructure models are Device Edge, Micro Edge, Distributed Edge Data Center, and Regional Edge Data Center.

Using these models as a starting point, we can quickly configure the broad strokes of the infrastructure needed to support any proposed edge site. This is critical to bringing the resiliency of those sites in line with their increasing criticality — a gap that is dangerously wide today.

How wide? About half of those responding to the latest survey say their edge sites have a level of resiliency consistent with the Uptime Institute’s Tier I or II classification, the lowest levels of resiliency. Likewise, more than 90% of sites are using at least 2 kW of power, which is the threshold at which dedicated IT cooling is recommended, but only 39% are using purpose-built IT cooling systems. These conditions raise the risk at edge locations that are already challenged by limited or non-existent on-site technical expertise.
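The cooling gap described above is simple to check against a site inventory. Here is a minimal Python sketch, assuming a hypothetical `EdgeSite` record and made-up sample sites; only the 2 kW threshold comes from the text.

```python
from dataclasses import dataclass

# Threshold quoted above: dedicated IT cooling is recommended at 2 kW and up.
COOLING_THRESHOLD_KW = 2.0


@dataclass
class EdgeSite:
    """Hypothetical inventory record; field names are illustrative."""
    name: str
    power_kw: float
    has_dedicated_cooling: bool


def cooling_gaps(sites):
    """Return sites at or above the threshold that lack purpose-built IT cooling."""
    return [
        site for site in sites
        if site.power_kw >= COOLING_THRESHOLD_KW and not site.has_dedicated_cooling
    ]


# Made-up sample data for illustration only.
sites = [
    EdgeSite("branch-closet", 1.5, False),
    EdgeSite("retail-hub", 6.0, False),
    EdgeSite("regional-pop", 40.0, True),
]

flagged = [site.name for site in cooling_gaps(sites)]
print(flagged)
```

In this sample only `retail-hub` is flagged: it draws more than 2 kW and has no dedicated cooling, while the closet sits below the threshold and the regional site is already covered.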

Our models consider use case, location, number of racks, power requirements and availability, number of tenants, external environment, passive infrastructure, edge infrastructure provider, and number of sites to be deployed, among many other factors, to categorize a potential deployment. At that point, we can match it to a standardized infrastructure that can be tailored to meet the operator’s specific needs. If you’re curious about your own edge sites, we’ve developed an online tool to help.
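As a rough illustration of how such a categorization might be expressed, the Python sketch below maps a site profile to one of the four model names. It is not the logic behind the models or the online tool: the `SiteProfile` fields are a small subset of the factors listed above, and the rack cut-offs are hypothetical placeholders chosen only to make the example run. A real classification would weigh all of the factors together.

```python
from dataclasses import dataclass
from enum import Enum


class EdgeModel(Enum):
    """The four edge infrastructure models named in the article."""
    DEVICE_EDGE = "Device Edge"
    MICRO_EDGE = "Micro Edge"
    DISTRIBUTED_EDGE_DC = "Distributed Edge Data Center"
    REGIONAL_EDGE_DC = "Regional Edge Data Center"


@dataclass
class SiteProfile:
    """Hypothetical subset of the factors the article lists."""
    use_case: str
    racks: int
    power_kw: float
    tenants: int


def suggest_model(profile):
    """Suggest a model from rack count alone.

    ASSUMPTION: the cut-offs below are illustrative placeholders,
    not the published definitions of the models.
    """
    if profile.racks == 0:
        return EdgeModel.DEVICE_EDGE
    if profile.racks < 5:
        return EdgeModel.MICRO_EDGE
    if profile.racks <= 20:
        return EdgeModel.DISTRIBUTED_EDGE_DC
    return EdgeModel.REGIONAL_EDGE_DC


profile = SiteProfile(use_case="retail analytics", racks=3, power_kw=12.0, tenants=1)
print(suggest_model(profile).value)
```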

Once we understand (1) the IT functionality and characteristics each site must support; (2) the physical footprint of the edge network; and (3) the infrastructure attributes required of each deployment, we can configure, build, and deploy exactly what is needed faster and more efficiently while minimizing time on site for installation and service. It’s a massive leap forward in edge design and deployment.

Today’s edge is more sophisticated, critical, and complex than ever before. By applying a systematic approach to site analysis, we can introduce customized standardization to the edge. This will reduce deployment times and costs, increase efficiency and uptime, and give our customers (and their customers) the seamless network experience they expect.

How would your organization benefit from a more standardized approach to edge deployment?